EgoTV: Egocentric Task Verification from Natural Language Task Descriptions

TLDR: We introduce the synthetic EgoTV dataset and the real-world CTV dataset to advance egocentric agents. Given (1) a natural language task description and (2) an egocentric video of an agent performing the task, the objective is to determine whether the task was executed correctly according to the description. The datasets feature multi-step tasks of varying complexity. We also propose a novel Neuro-Symbolic Grounding (NSG) approach that outperforms state-of-the-art (SOTA) vision-language models in causal, temporal, and compositional reasoning.


To enable progress towards egocentric agents capable of understanding everyday tasks specified in natural language, we propose a benchmark and a synthetic dataset called Egocentric Task Verification (EgoTV). The goal in EgoTV is to verify the execution of tasks from egocentric videos based on the natural language descriptions of these tasks. EgoTV contains pairs of videos and task descriptions for multi-step tasks; these tasks involve multiple sub-task decompositions, state changes, object interactions, and sub-task ordering constraints. In addition, EgoTV provides abstracted task descriptions that contain only partial details about how to accomplish a task. Consequently, EgoTV requires causal, temporal, and compositional reasoning over the video and language modalities, a combination missing from existing datasets. We also find that existing vision-language models struggle with the all-round reasoning needed for task verification in EgoTV. Inspired by the needs of EgoTV, we propose a novel Neuro-Symbolic Grounding (NSG) approach that leverages symbolic representations to capture the compositional and temporal structure of tasks. We demonstrate NSG's capability for task tracking and verification on our EgoTV dataset and on a real-world dataset derived from CrossTask (CTV). We open-source the EgoTV and CTV datasets and the NSG model for future research on egocentric assistive agents.
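To make the role of sub-task ordering concrete, here is a minimal Python sketch of verification once sub-tasks have already been recognized in a video. It assumes a hypothetical representation that is not the EgoTV data format: a task is a set of sub-task names plus (before, after) ordering constraints, and the video has been reduced to an ordered list of detected sub-tasks. The hard part that EgoTV targets, recognizing those sub-tasks from raw egocentric video, is deliberately left out.

```python
from typing import Dict, List, Set, Tuple


def verify_execution(
    subtasks: Set[str],
    constraints: Set[Tuple[str, str]],
    observed: List[str],
) -> bool:
    """Return True if all required sub-tasks occur and every (before, after) constraint holds.

    Hypothetical representation: `observed` is the ordered list of sub-tasks
    already detected in the video; detection itself is not modeled here.
    """
    # Time step at which each sub-task is first observed.
    first_seen: Dict[str, int] = {}
    for step, name in enumerate(observed):
        first_seen.setdefault(name, step)
    # Required sub-tasks include any sub-task mentioned in a constraint.
    required = set(subtasks).union(*constraints)
    if not all(name in first_seen for name in required):
        return False
    # Each ordering constraint must hold on first occurrences.
    return all(first_seen[a] < first_seen[b] for a, b in constraints)


task = {"heat(apple)", "place(apple, fridge)"}
order = {("heat(apple)", "place(apple, fridge)")}
print(verify_execution(task, order, ["pick(apple)", "heat(apple)", "place(apple, fridge)"]))  # True
print(verify_execution(task, order, ["place(apple, fridge)", "heat(apple)"]))  # False
```

Placing the apple in the fridge before heating it fails verification even though both sub-tasks occur, which is the kind of temporal error a task verifier must catch. Checking only first occurrences is a simplification of this sketch.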

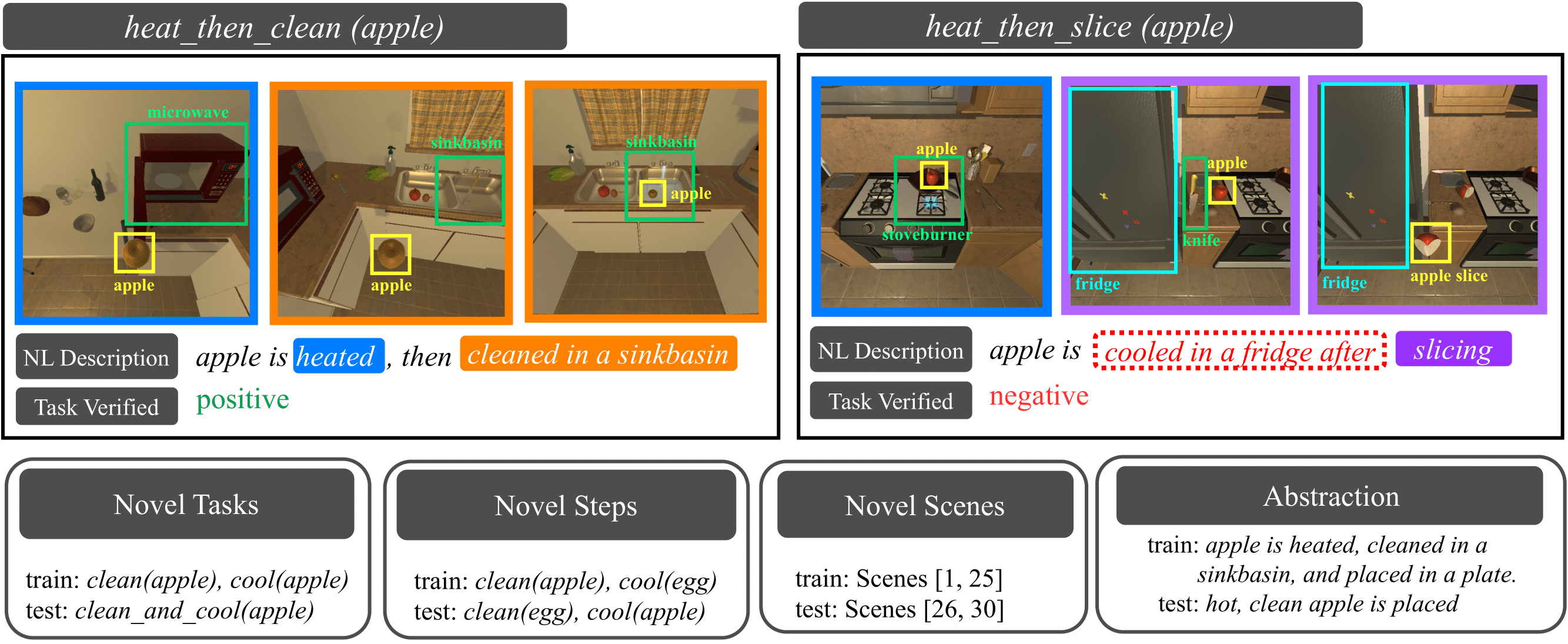

Figure 1. EgoTV dataset. A positive example [Left] and a negative example [Right] from the train set, along with illustrative examples from the test splits [Bottom] of EgoTV, are shown. The test splits focus on generalization to novel compositions of tasks, unseen sub-tasks (steps) and scenes, and abstraction in NL task descriptions. The bounding boxes are for illustration only and are not used during training or inference.
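The compositional test splits can be read as membership checks over sub-tasks. The sketch below assumes, hypothetically, that each task is an ordered tuple of sub-task names; it labels a test task as using an unseen sub-task, as a task already seen in training, or as a novel composition of seen sub-tasks. The actual EgoTV split construction, which also covers unseen scenes and abstracted descriptions, is not reproduced here.

```python
from typing import Iterable, Sequence


def classify_test_task(test_task: Sequence[str], train_tasks: Iterable[Sequence[str]]) -> str:
    """Label a test task relative to the training tasks (hypothetical split logic)."""
    train = {tuple(t) for t in train_tasks}        # training tasks, order preserved
    seen_subtasks = {s for t in train for s in t}  # every sub-task seen in training
    task = tuple(test_task)
    if any(s not in seen_subtasks for s in task):
        return "unseen sub-task"
    if task in train:
        return "seen task"
    # All sub-tasks were seen, but never in this combination or order.
    return "novel composition"


train = [("heat", "slice"), ("cool",)]
print(classify_test_task(("cool", "slice"), train))  # novel composition
print(classify_test_task(("clean",), train))  # unseen sub-task
```

Tuples rather than sets are used so that reordering known sub-tasks also counts as a new composition, reflecting the ordering constraints that EgoTV tasks carry.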


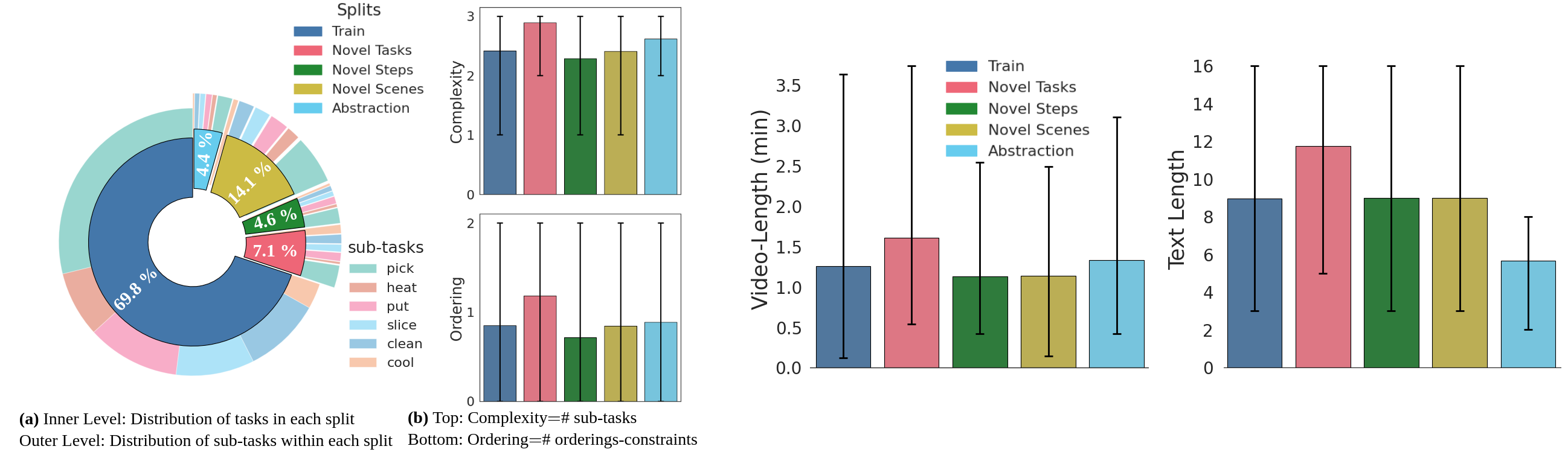

Figure 2. EgoTV dataset statistics.


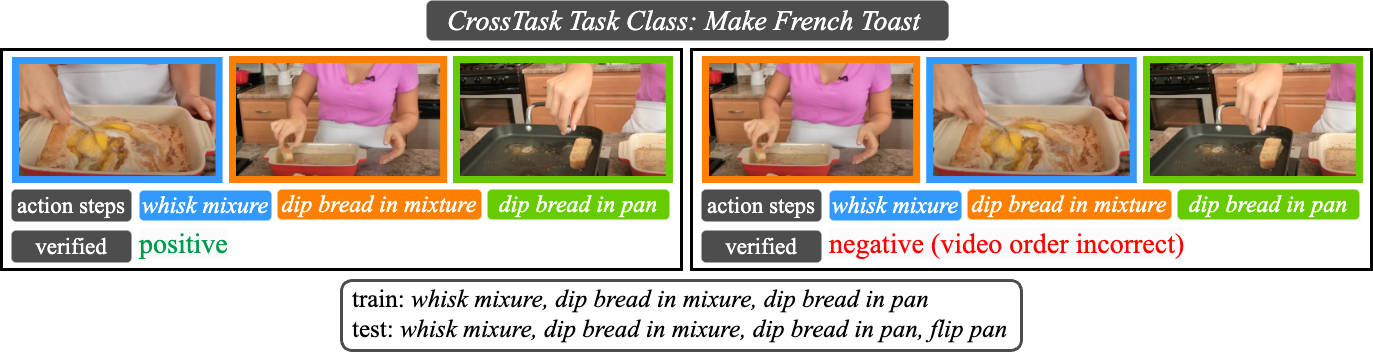

Figure 3. CrossTask Verification (CTV) dataset.

We introduce the CrossTask Verification (CTV) dataset, which uses videos from the CrossTask dataset to evaluate task verification models on real-world videos. CTV thus complements the EgoTV dataset; together, CTV and EgoTV provide a solid test-bed for future research on task verification. CrossTask has 18 task classes, each with ~150 videos, from which we create ~2.7K samples.
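One plausible way to turn step-annotated real-world videos into verification samples is to pair each video with its true step sequence (a positive) and with a perturbed sequence (a negative). The sketch below swaps two steps to build a negative. This is an illustrative assumption, not necessarily how CTV samples were generated, and a swap only yields a true negative when the order of those two steps actually matters for the task.

```python
import random
from typing import List, Tuple

Sample = Tuple[str, List[str], bool]  # (video_id, step sequence, is_correct)


def make_verification_samples(video_id: str, steps: List[str], rng: random.Random) -> List[Sample]:
    """Hypothetical: one positive (true steps) and one order-violating negative per video."""
    samples: List[Sample] = [(video_id, list(steps), True)]
    if len(steps) >= 2:
        i, j = rng.sample(range(len(steps)), 2)  # two distinct positions
        swapped = list(steps)
        swapped[i], swapped[j] = swapped[j], swapped[i]
        if swapped != steps:  # swapping two identical steps would not change the order
            samples.append((video_id, swapped, False))
    return samples


rng = random.Random(0)
for sample in make_verification_samples("video_001", ["pour water", "add coffee", "stir"], rng):
    print(sample)
```

Passing an explicit `random.Random` instance keeps sample generation reproducible across runs.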

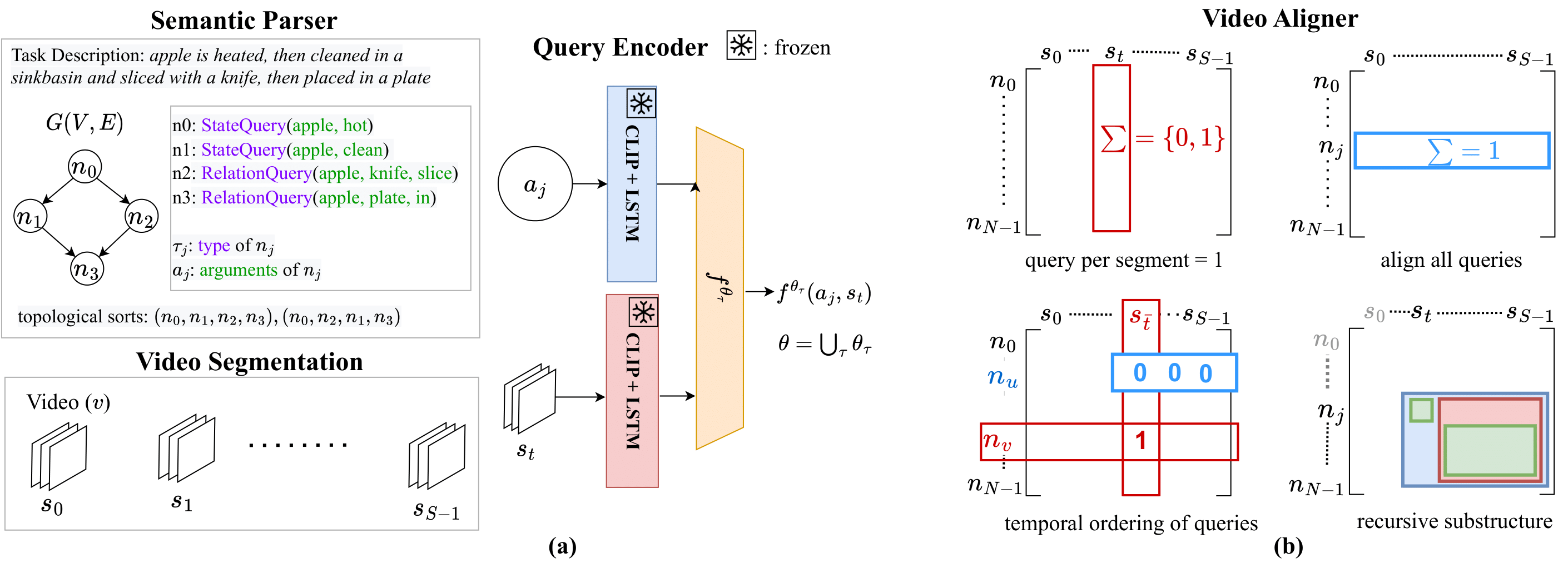

Figure 4. NSG model: (a) semantic parser and query encoder; (b) video aligner.

EgoTV requires visual grounding of task-relevant entities extracted from NL task descriptions, such as actions and state changes, in order to verify tasks in videos. To enable grounding that generalizes to novel compositions of tasks and actions, we propose Neuro-Symbolic Grounding (NSG). NSG consists of three modules: (a, left) a semantic parser, which converts task-relevant states from NL task descriptions into symbolic graphs; (a, right) query encoders, which generate the probability that a node in the symbolic graph is grounded in a video segment; and (b) a video aligner, which uses the query encoders to align these symbolic graphs with videos. NSG thus uses intermediate symbolic representations between NL task descriptions and the corresponding videos to achieve compositional generalization.
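The video aligner can be pictured as a monotonic alignment problem. Assume, hypothetically, that the symbolic graph has been linearized into an ordered chain of K query nodes (at least one) and that a query encoder has produced `probs[k][t]`, the probability that node k is grounded in video segment t. The sketch below uses dynamic programming to assign each node a distinct segment with strictly increasing indices, maximizing the total log-probability. It is a simplified stand-in for NSG's aligner, whose exact objective and handling of non-chain graphs may differ.

```python
import math
from typing import List, Tuple

NEG_INF = -math.inf


def _log(p: float) -> float:
    """Log-probability, with zero probability mapped to -inf."""
    return math.log(p) if p > 0 else NEG_INF


def align_nodes(probs: List[List[float]]) -> Tuple[float, List[int]]:
    """Monotonically align K ordered query nodes to T video segments.

    probs[k][t] is a (hypothetical) query-encoder probability that node k is
    grounded in segment t. Each node gets a distinct segment, with strictly
    increasing segment indices, maximizing the summed log-probability.
    Returns (score, segment index per node), or (-inf, []) if no alignment exists.
    """
    num_nodes, num_segs = len(probs), len(probs[0])
    if num_nodes > num_segs:
        return NEG_INF, []
    # best[k][t]: best score for nodes 0..k with node k placed at segment t.
    best = [[NEG_INF] * num_segs for _ in range(num_nodes)]
    back = [[-1] * num_segs for _ in range(num_nodes)]
    for t in range(num_segs):
        best[0][t] = _log(probs[0][t])
    for k in range(1, num_nodes):
        running, arg = NEG_INF, -1  # best score of node k-1 over segments < t
        for t in range(num_segs):
            if t > 0 and best[k - 1][t - 1] > running:
                running, arg = best[k - 1][t - 1], t - 1
            if arg >= 0:
                best[k][t] = running + _log(probs[k][t])
                back[k][t] = arg
    # Choose the best segment for the last node, then backtrack.
    last = max(range(num_segs), key=lambda t: best[num_nodes - 1][t])
    score = best[num_nodes - 1][last]
    if score == NEG_INF:
        return NEG_INF, []
    path = [last]
    for k in range(num_nodes - 1, 0, -1):
        path.append(back[k][path[-1]])
    path.reverse()
    return score, path


score, path = align_nodes([[0.9, 0.1, 0.1], [0.1, 0.2, 0.8]])
print(path)  # [0, 2]
```

Because segment indices must increase, a node that scores highly early in the video cannot be matched there if an earlier node is only grounded later; this is how ordering constraints enter the alignment. Turning the returned score into a verified/not-verified decision, for example by thresholding, would be a further assumption.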

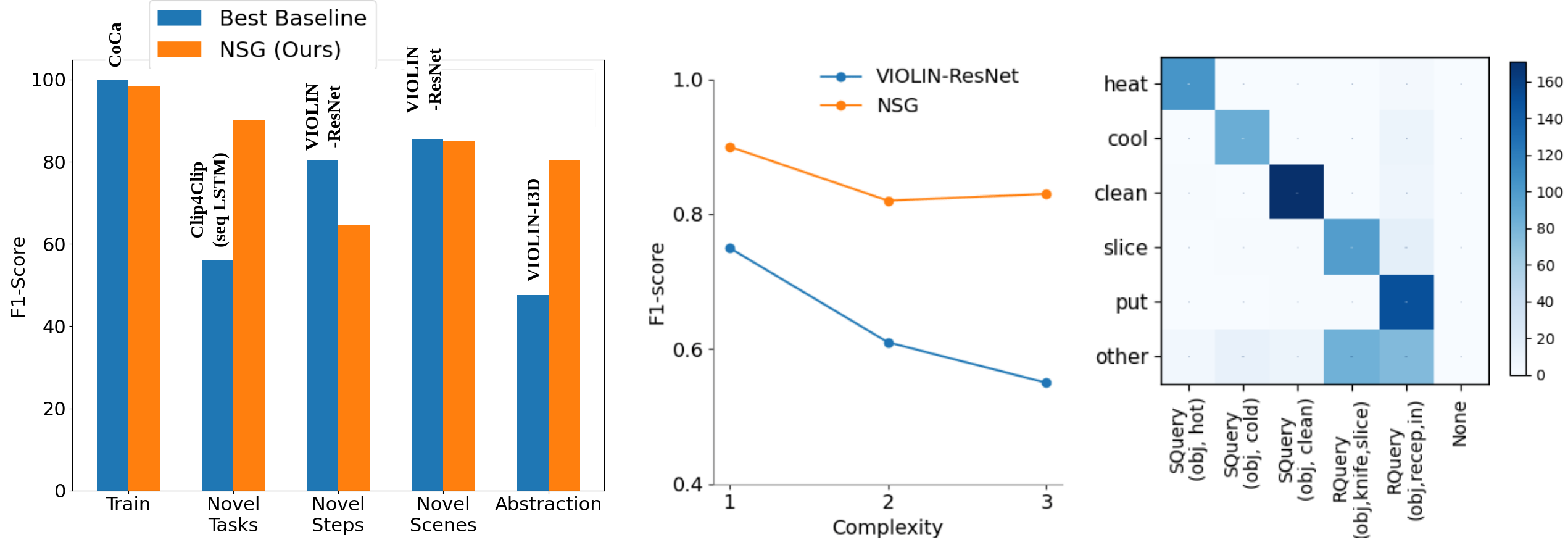

Figure 5. [Left] Comparison of baselines with NSG on different data splits using F1-score. [Middle] F1-score of NSG vs. the best-performing baseline for EgoTV tasks of varying complexity, averaged over all splits. [Right] Confusion matrix for NSG queries on the validation split (SQuery: StateQuery, RQuery: RelationQuery).
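Task verification is a binary decision, so an F1-score follows the usual precision/recall definition, F1 = 2PR / (P + R). The sketch below assumes the positive class is "task executed correctly" and computes the confusion-matrix counts alongside F1; it is a generic metric illustration, not our evaluation script.

```python
from typing import Dict, List


def verification_metrics(y_true: List[bool], y_pred: List[bool]) -> Dict[str, float]:
    """Confusion-matrix counts and F1, treating 'task executed correctly' as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t and p for t, p in pairs)
    fp = sum((not t) and p for t, p in pairs)
    fn = sum(t and (not p) for t, p in pairs)
    tn = sum((not t) and (not p) for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall, "f1": f1}


print(verification_metrics([True, True, False, False], [True, False, True, False])["f1"])  # 0.5
```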


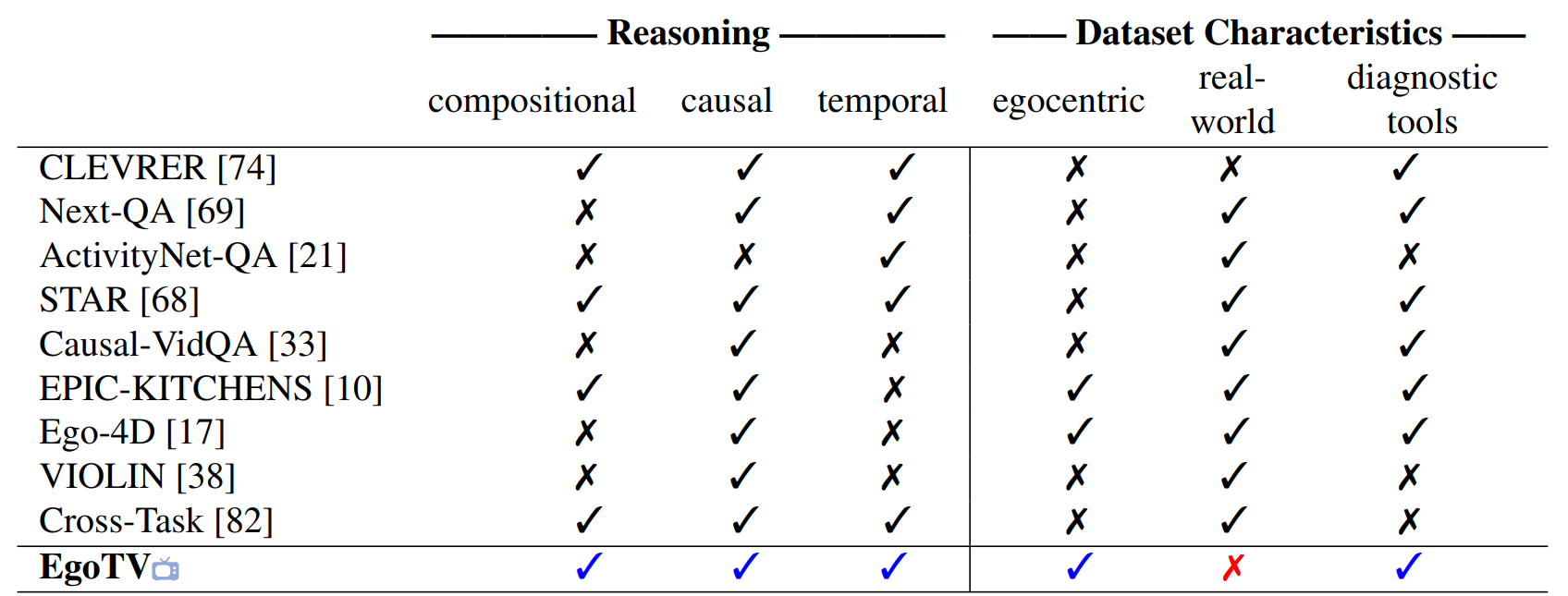

EgoTV vs. existing video-language datasets. The EgoTV benchmark enables systematic investigation (diagnostics) of compositional, causal (e.g., effects of actions), and temporal (e.g., action ordering) reasoning in egocentric settings.


R. Hazra, B. Chen, A. Rai, N. Kamra, R. Desai