Fusing LLM & Classical Planning

In the previous part (Why are LLMs required for Planning?), we asked: can we improve LLM planning so that it comes with some formal guarantees?

To answer that, let’s first recap.

|  | LLM Planning | Classical Planning |
|---|---|---|
| Open-world Planning | ✅ | ❌ |
| Handling Abstract Tasks | ✅ | ❌ |
| Handling Partial Observability | ✅ | ❌ |
| Feasibility | ❌ | ✅ |
| Optimality | ❌ | ✅ |

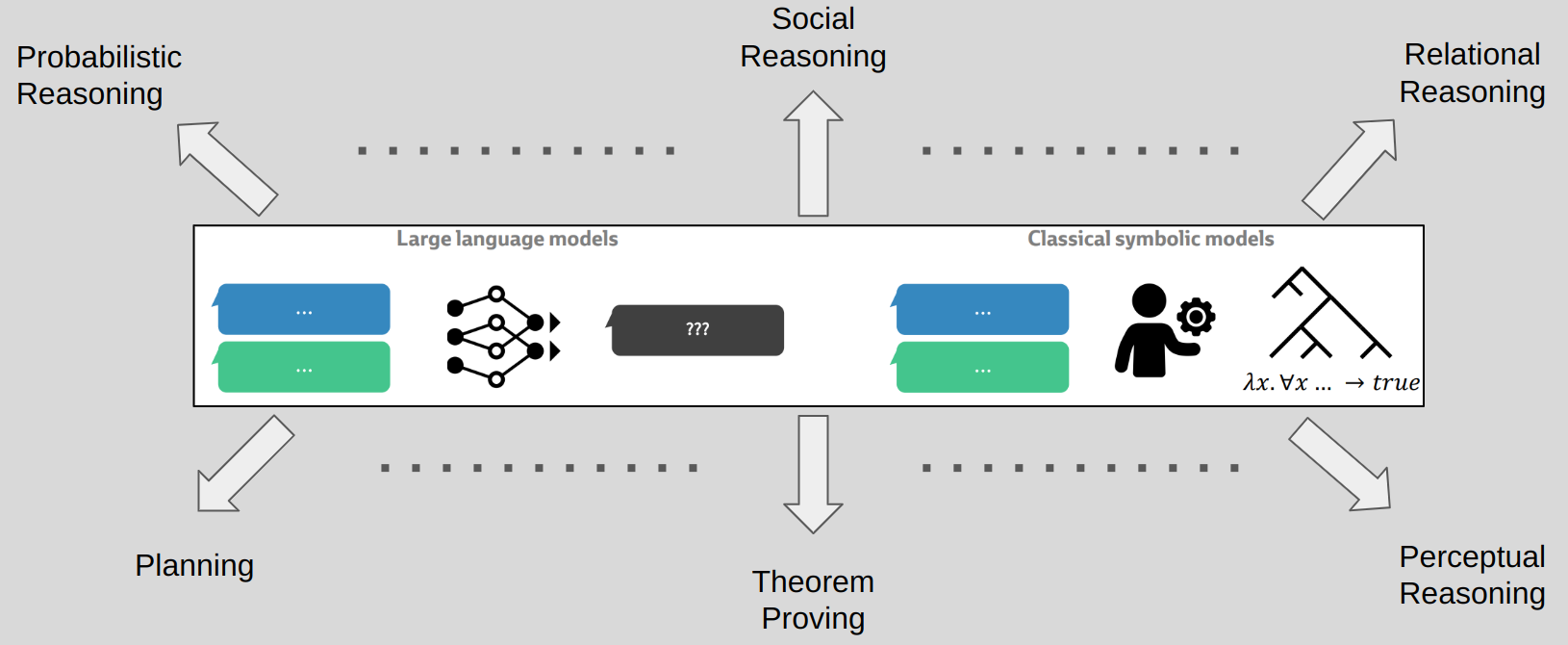

We can observe that the formal guarantees come from classical planning. So can we combine the best of both? Any guesses? Here’s a hint to jog your memory.

Ring any bells now? You guessed it right.

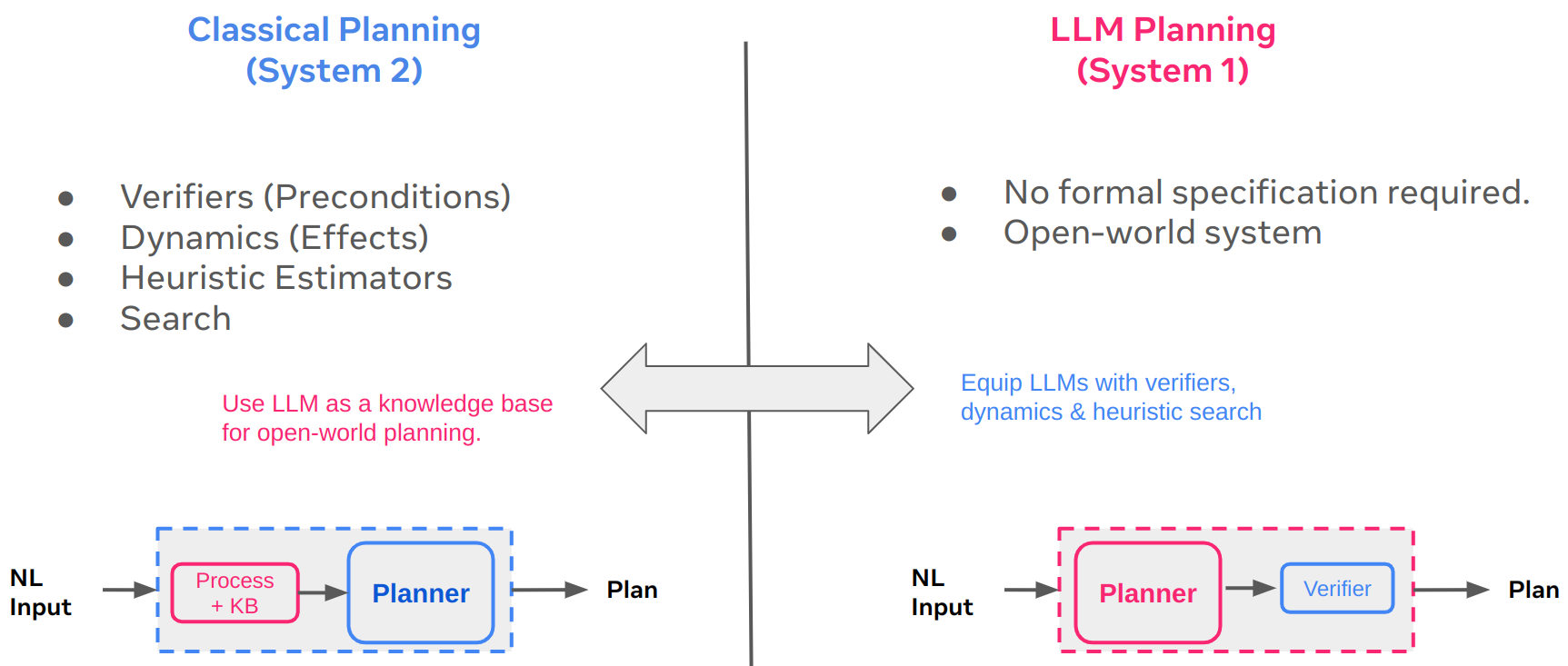

Treating LLM planning as System 1 and classical planning as System 2, we look at two ways of combining the best of both:

- Equip LLMs with verifiers, dynamics, and heuristic search.

- Use LLMs as knowledge bases for open-world planning.

LLMs + verifiers, dynamics, and heuristic search

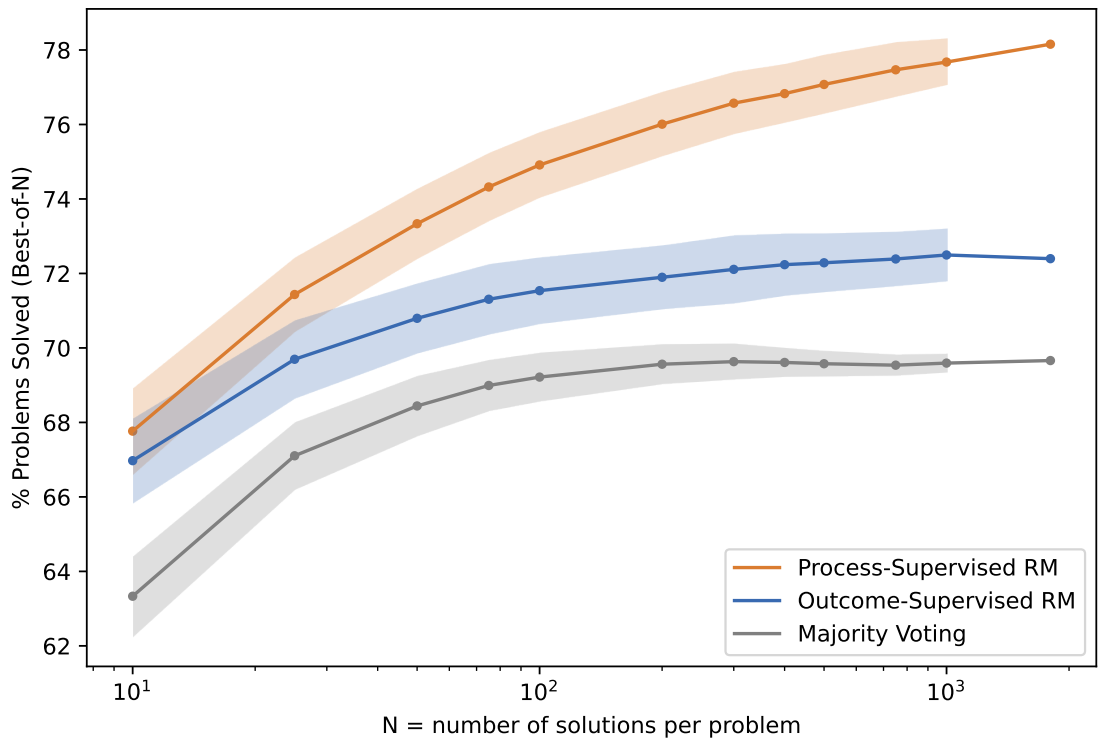

Let’s start with some motivation. Lightman et al. [1] show that:

- LLMs can generate good solutions if sampled multiple times.

- Verifiers help discard bad candidate actions/plans.
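The generate-then-verify idea behind these two observations can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the setup of [1] (which trains reward models for step-by-step math reasoning): a hypothetical `mock_llm_sample` stands in for repeated LLM calls, and `verify` is a hand-written precondition checker for a made-up cup-serving domain. Candidates that fail verification are discarded, and the shortest surviving plan is kept.

```python
import random
from typing import Callable, List, Optional

Plan = List[str]

# Hypothetical toy domain: preconditions and effects of each action.
PRECONDITIONS = {
    "pick up cup": set(),
    "wash cup": {"holding cup"},
    "fill cup": {"holding cup"},
    "serve cup": {"cup full"},
}
EFFECTS = {
    "pick up cup": {"holding cup"},
    "wash cup": {"cup clean"},
    "fill cup": {"cup full"},
    "serve cup": {"served"},
}
ACTIONS = list(PRECONDITIONS)


def mock_llm_sample(rng: random.Random) -> Plan:
    """Hypothetical stand-in for one LLM call: a random ordering of a random subset of actions."""
    k = rng.randint(1, len(ACTIONS))
    return rng.sample(ACTIONS, k)


def verify(plan: Plan) -> bool:
    """Simulate the plan; reject it if any precondition fails or the goal is unmet."""
    state: set = set()
    for action in plan:
        if not PRECONDITIONS[action] <= state:
            return False
        state |= EFFECTS[action]
    return "served" in state


def generate_and_verify(
    sample_plan: Callable[[random.Random], Plan],
    verify: Callable[[Plan], bool],
    score: Callable[[Plan], float],
    n_samples: int = 8,
    seed: int = 0,
) -> Optional[Plan]:
    """Sample n candidate plans, drop those the verifier rejects, return the best survivor."""
    rng = random.Random(seed)
    candidates = [sample_plan(rng) for _ in range(n_samples)]
    valid = [plan for plan in candidates if verify(plan)]
    return max(valid, key=score) if valid else None


best = generate_and_verify(mock_llm_sample, verify, score=lambda p: -len(p), n_samples=50)
print(best)  # a verified plan, or None if every sample was rejected
```

With a real LLM, `sample_plan` would wrap a sampling call, and the verifier could be a simulator or a learned model; the structure (sample many, filter, rank) stays the same.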

1. LLMs + external verifiers

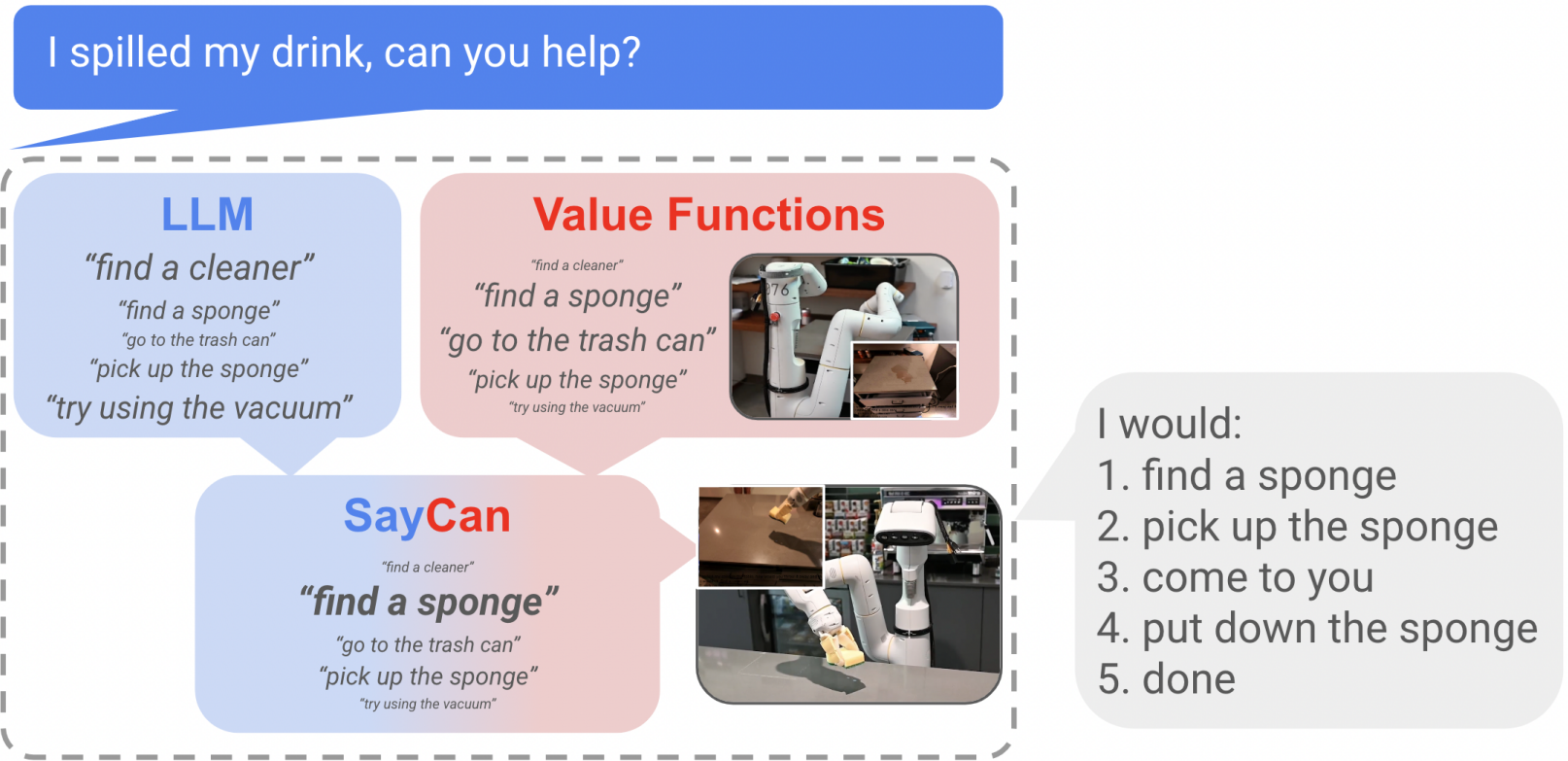

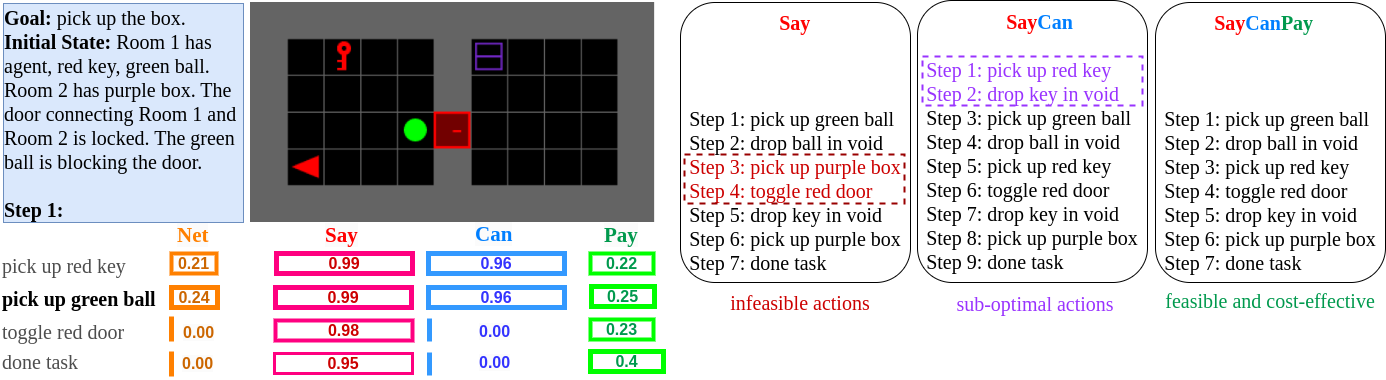

SayCan [2] has a critical limitation: it selects actions based solely on feasibility, not on their relevance to the goal. Consider an analogy: if you’re traveling from San Francisco to New York, would it make sense to fly via New Delhi simply because that route is feasible? To address this, SayCanPay [3] adds a Pay model that estimates the payoff of an action with respect to the goal, so that actions closer to optimal with respect to the goal are more likely to be selected.
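To make the analogy concrete, here is a toy sketch of one-step action selection. It assumes the Say, Can, and Pay scores are combined by multiplication, and every score is a hypothetical number chosen for illustration: the LLM is equally happy with both flights, so SayCan’s choice is decided by feasibility, while the Pay term steers SayCanPay toward New York.

```python
from typing import Callable, Dict, NamedTuple


class Scores(NamedTuple):
    say: float  # LLM likelihood of the action given the goal (hypothetical value)
    can: float  # feasibility / affordance estimate (hypothetical value)
    pay: float  # estimated payoff of the action with respect to the goal (hypothetical value)


def saycan_score(s: Scores) -> float:
    return s.say * s.can


def saycanpay_score(s: Scores) -> float:
    return s.say * s.can * s.pay


def select(candidates: Dict[str, Scores], scorer: Callable[[Scores], float]) -> str:
    """Pick the candidate action with the highest combined score."""
    return max(candidates, key=lambda action: scorer(candidates[action]))


candidates = {
    "fly to New Delhi": Scores(say=0.5, can=0.9, pay=0.1),
    "fly to New York": Scores(say=0.5, can=0.8, pay=0.9),
}

print(select(candidates, saycan_score))     # fly to New Delhi  (0.45 vs 0.40)
print(select(candidates, saycanpay_score))  # fly to New York   (0.045 vs 0.36)
```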

2. LLMs + external verifiers + heuristic search

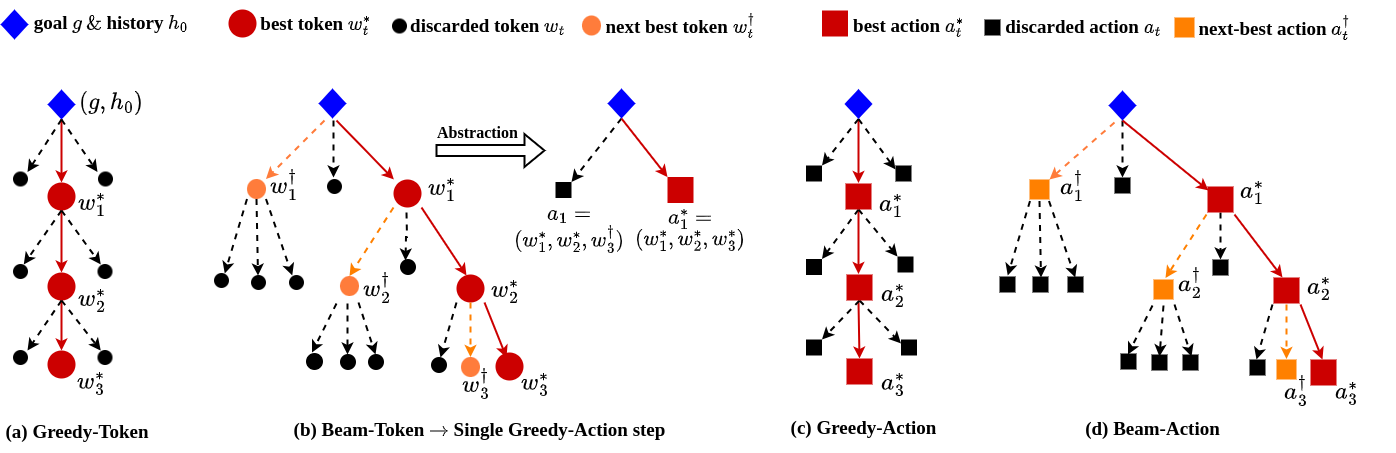

SayCanPay [3] also proposes heuristic search using an aggregated score of the Say (LLM), Can (feasibility), and Pay (optimality) models. As shown in the figure above, Beam-Action search performs a beam search over actions, mirroring the search in heuristic planners. In their formulation, the overall score for each action is the sum of the aggregated score and a heuristic score, akin to A* planning.

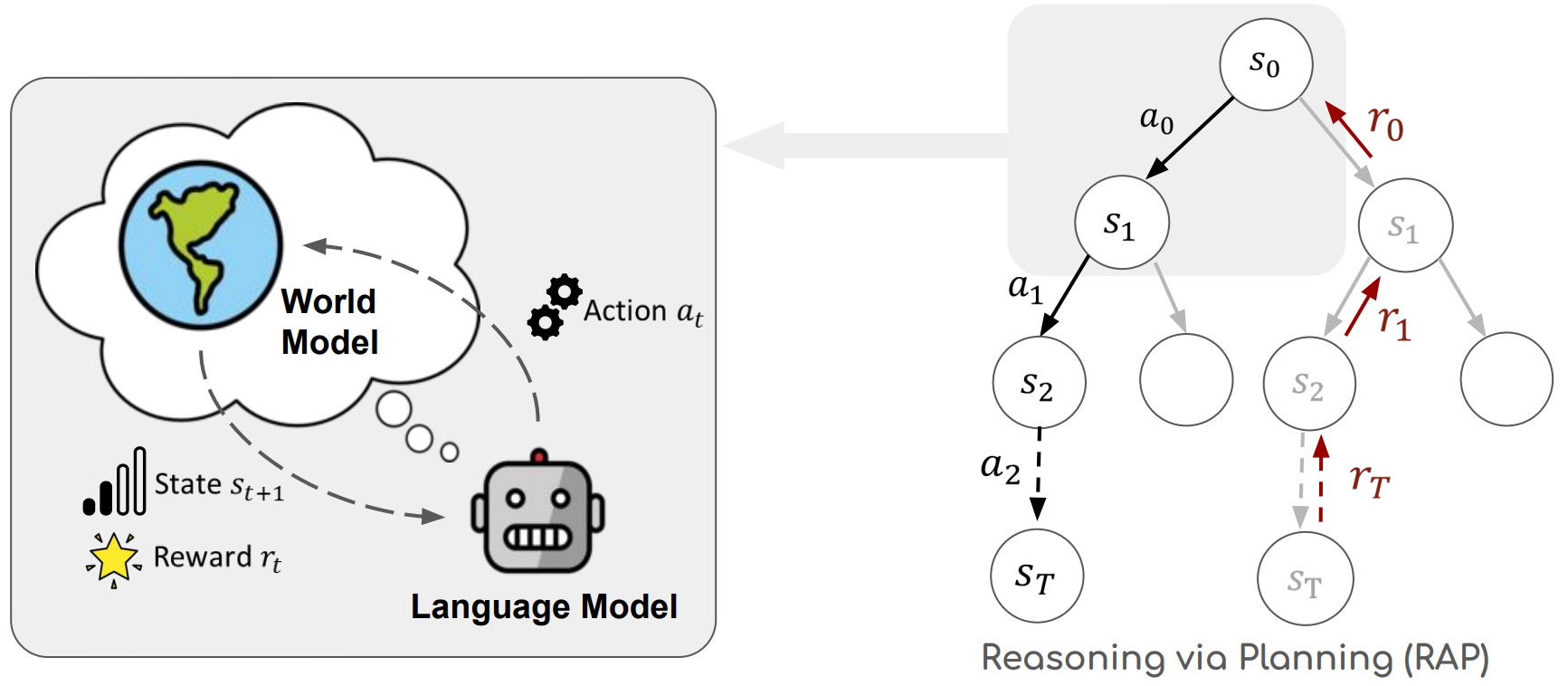

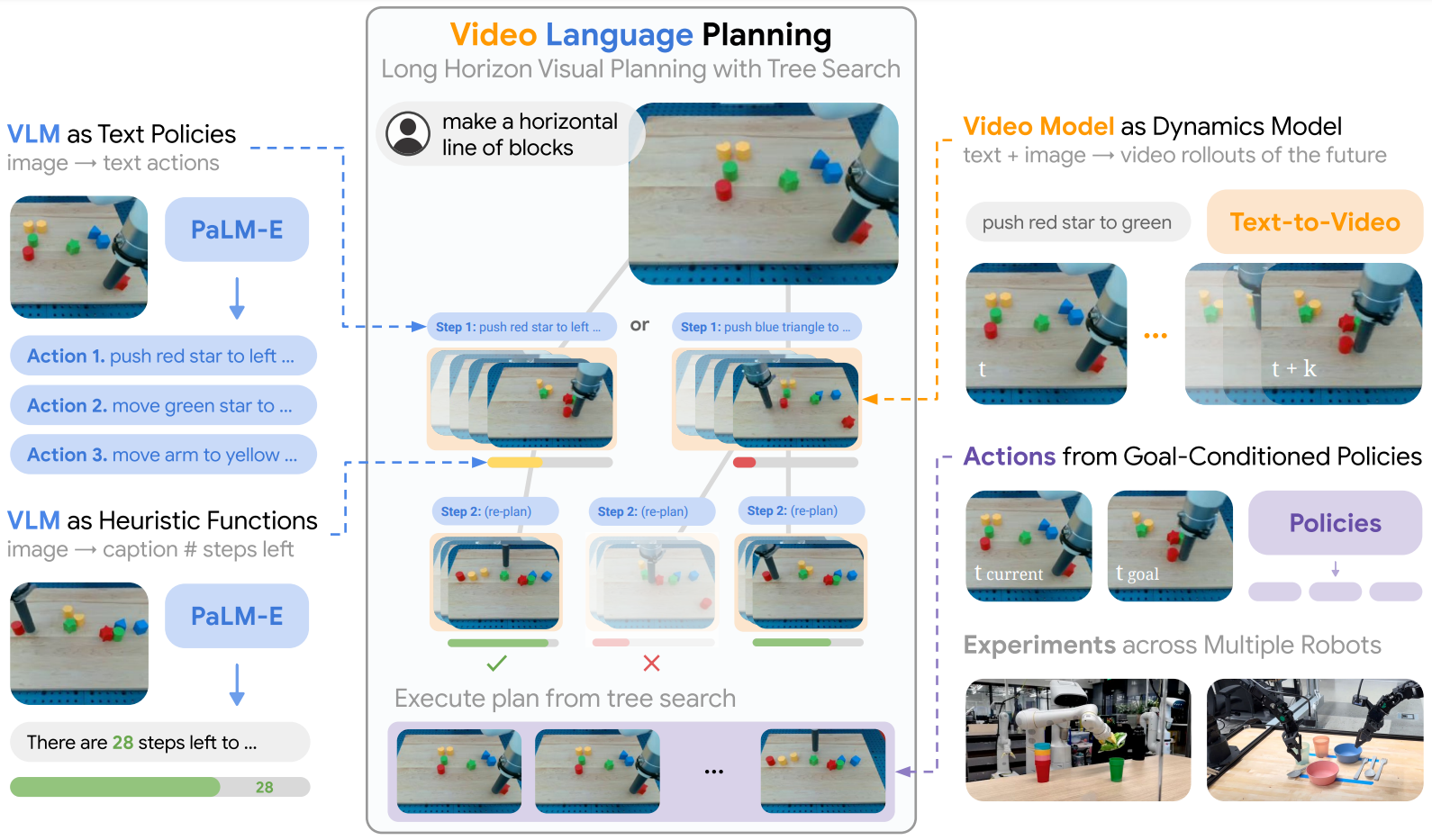
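A rough sketch of beam search with an A*-style score f = g + h is shown below. It is an illustration under our own assumptions, not SayCanPay’s exact formulation: we treat the cumulative log of a Say × Can score as the path score g and the log of a Pay estimate as the heuristic h, and the travel domain, `SAY_CAN`, and `PAY` tables are hypothetical. Working in log space turns products of scores into sums.

```python
import math
from typing import Callable, Dict, List, Tuple

Plan = Tuple[str, ...]

# Hypothetical toy travel domain; each action is "fly to <city>".
# SAY_CAN[city][next_city]: aggregated Say x Can score for taking that flight.
SAY_CAN: Dict[str, Dict[str, float]] = {
    "SFO": {"DEL": 0.5, "ORD": 0.3, "JFK": 0.2},
    "DEL": {"JFK": 0.9},
    "ORD": {"JFK": 0.9},
    "JFK": {},
}
# PAY[city]: hypothetical estimated payoff of being in that city for the goal (reach JFK).
PAY: Dict[str, float] = {"SFO": 0.5, "DEL": 0.1, "ORD": 0.8, "JFK": 1.0}


def current_city(plan: Plan) -> str:
    return plan[-1] if plan else "SFO"


def is_goal(plan: Plan) -> bool:
    return current_city(plan) == "JFK"


def pay_heuristic(plan: Plan) -> float:
    # h: log of the estimated payoff from the plan's current state
    return math.log(PAY[current_city(plan)])


def beam_action_search(
    heuristic: Callable[[Plan], float],
    beam_width: int = 2,
    max_depth: int = 5,
) -> Plan:
    beam: List[Tuple[float, Plan]] = [(0.0, ())]  # (g, partial plan)

    def f(item: Tuple[float, Plan]) -> float:
        g, plan = item
        return g + heuristic(plan)  # A*-style f = g + h

    for _ in range(max_depth):
        expanded: List[Tuple[float, Plan]] = []
        for g, plan in beam:
            if is_goal(plan):
                expanded.append((g, plan))  # completed plans keep competing
                continue
            for nxt, score in SAY_CAN[current_city(plan)].items():
                # g: cumulative log of the aggregated Say x Can scores
                expanded.append((g + math.log(score), plan + (nxt,)))
        if not expanded:
            break
        # keep only the top-k partial plans ranked by f
        beam = sorted(expanded, key=f, reverse=True)[:beam_width]
        if all(is_goal(plan) for _, plan in beam):
            break
    return max(beam, key=f)[1]


print(beam_action_search(pay_heuristic))       # ('ORD', 'JFK')
print(beam_action_search(lambda plan: 0.0))    # ('DEL', 'JFK')
```

Without the heuristic, the New Delhi detour has the highest Say × Can score and wins; with the Pay-style heuristic, it is pruned from the beam after the first step.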

3. LLMs + dynamics models

LLMs as Knowledge Bases for Planners

Perhaps a more apt conclusion would be:

References

[1] Lightman, H. (2023). Let’s Verify Step by Step.

[2] Ahn, M. (2022). Do As I Can, Not As I Say: Grounding Language in Robotic Affordances. 6th Annual Conference on Robot Learning.

[3] Hazra, R. (2023). SayCanPay: Heuristic Planning with Large Language Models Using Learnable Domain Knowledge. Proceedings of the AAAI Conference on Artificial Intelligence, 20123–20133.

[4] Hao, S. (2023). Reasoning with Language Models is Planning with World Models. Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, 8154–8173.

[5] Du, Y. (2024). Video Language Planning. The Twelfth International Conference on Learning Representations.

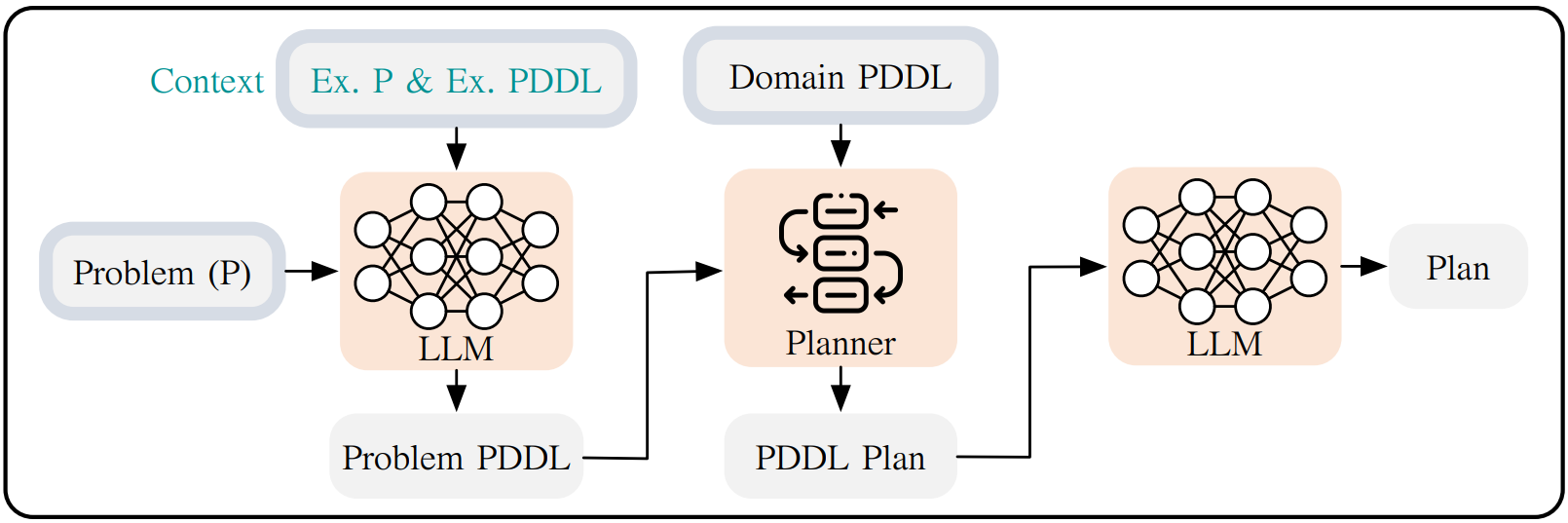

[6] Liu, B. (2023). LLM+P: Empowering Large Language Models with Optimal Planning Proficiency.

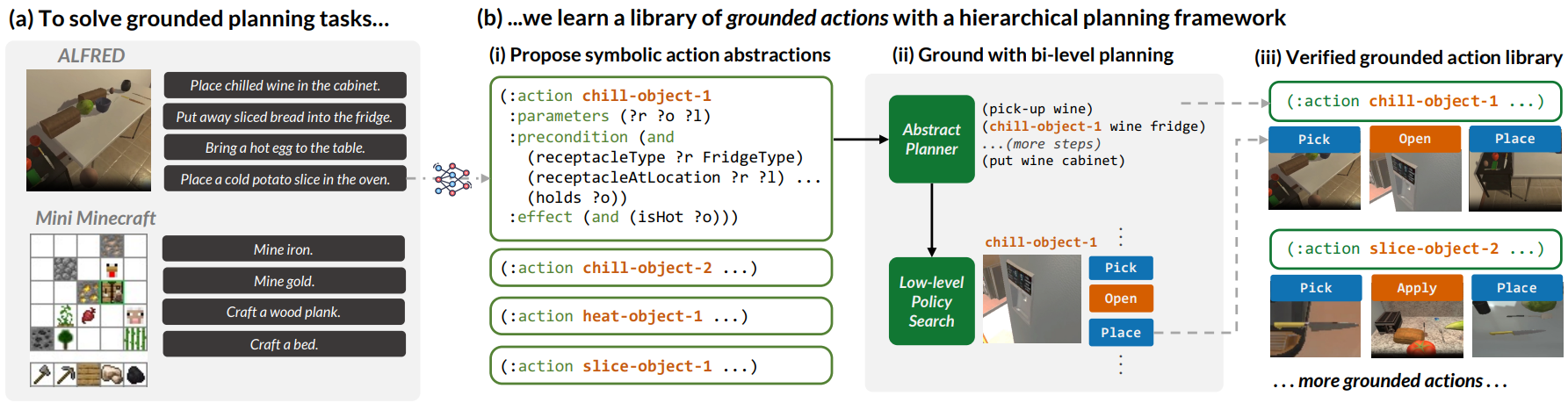

[7] Wong, L. (2024). Learning Grounded Action Abstraction from Language. The Twelfth International Conference on Learning Representations.

If you found this useful, please cite this as:

Hazra, Rishi (May 2024). Fusing LLM & Classical Planning. https://rishihazra.github.io.

or as a BibTeX entry:

```bibtex
@article{hazra2024fusing-llm-classical-planning,
  title = {Fusing LLM & Classical Planning},
  author = {Hazra, Rishi},
  year = {2024},
  month = {May},
  url = {https://rishihazra.github.io/llm-planning/2024/05/26/fusing_llms_and_planners.html}
}
```