Why are LLMs required for Planning?

In my talks, I often encounter a couple of recurring questions. The first is: why do I use LLMs as planners in my work? Is it simply because they’re the latest rage? Let’s be clear: if you can do something with a classical planner, don’t use a trillion-parameter model for the same task. That’d be like throwing the kitchen sink at it. However, as we will see, not everything can be solved simply with a classical planner.

Once we tackle that, the inevitable follow-up question arises: are they all you need for planning?

These are common questions, and many of us don’t have clear answers. Through this blog post, I aim to shed light on these inquiries. To kick things off, let’s clearly outline the key questions we’ll explore:

Q1. Why are LLMs useful for planning? Why consider LLMs over classical methods? We’ll dive into the flexibility and dynamic problem-solving capabilities of LLMs, illustrating how they adapt to new and unforeseen challenges in planning.

Q2. Are they really All You Need for planning? Can LLMs meet all your planning needs, or do they have their limitations? We’ll critically assess the strengths and potential drawbacks of relying solely on LLMs for planning tasks.

Q1. Why are LLMs useful for planning?

1. Open-world Planning

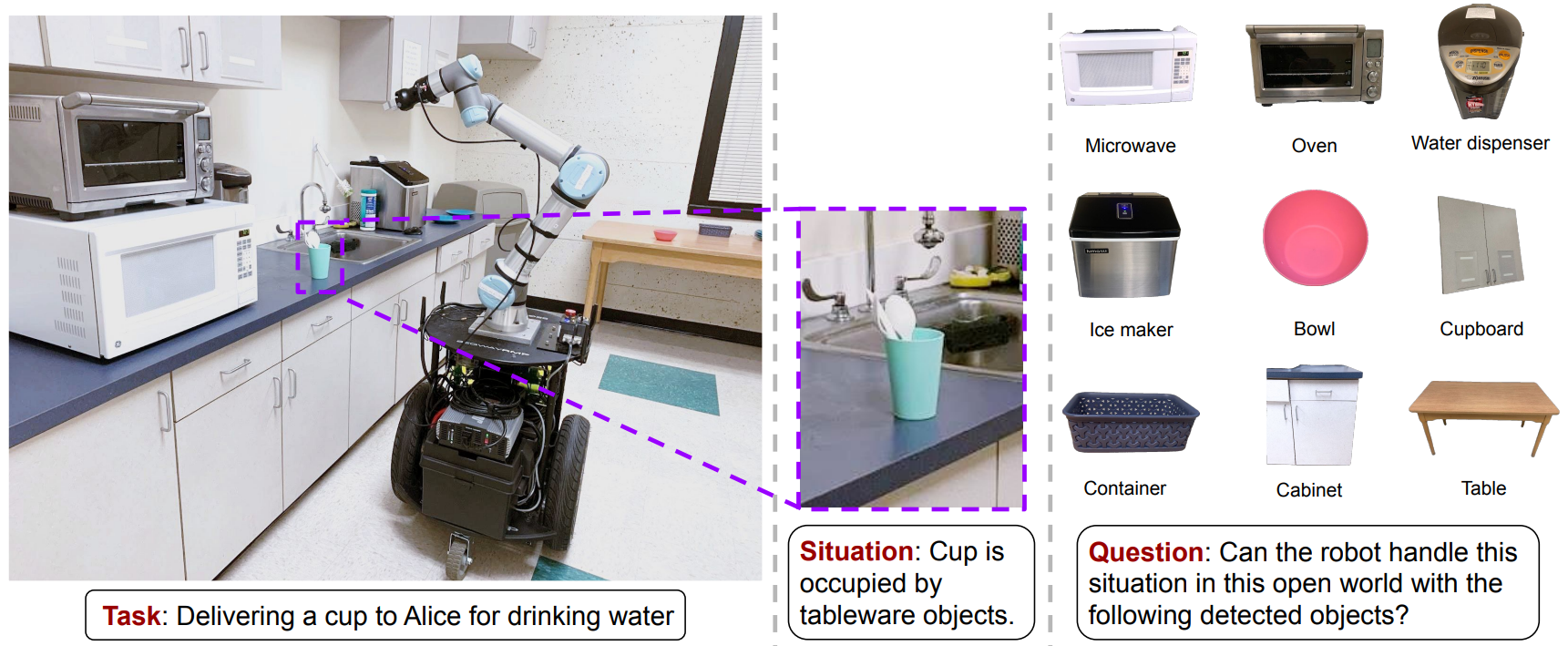

Here’s a scenario: a robot is tasked with delivering a cup for a drink, but the cup is already occupied by tableware. If the robot can’t empty the cup itself, what is it to do? Stuck with a predefined domain, a classical planner is restricted to the objects (cups and glasses) declared in its domain file.

An LLM, on the other hand, can draw on its world knowledge to suggest, “How about a bowl?” [1] As humans, we know a bowl can function much like a cup; in technical terms, they have similar affordances.
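To make the contrast concrete, here is a minimal sketch built on a hypothetical, hand-written affordance table (`AFFORDANCES`). It stands in for the LLM’s world knowledge; in [1] that knowledge comes from querying the LLM, not from a fixed dictionary. The closed-world choice only looks at objects declared in the domain, while the open-world choice accepts any usable object that offers the needed affordance. All object and affordance names are illustrative assumptions.

```python
# Hypothetical affordance knowledge: in [1] this comes from the LLM;
# here a hard-coded table stands in for that query.
AFFORDANCES: dict[str, set[str]] = {
    "cup": {"contain_liquid", "graspable"},
    "glass": {"contain_liquid", "graspable"},
    "bowl": {"contain_liquid", "graspable"},
    "plate": {"support_food", "graspable"},
}


def closed_world_choice(domain_objects: list[str], usable: set[str], needed: str):
    """A classical planner only considers objects declared in its domain file."""
    for obj in domain_objects:
        if obj in usable and needed in AFFORDANCES.get(obj, set()):
            return obj
    return None  # no plan: every declared container is unusable


def open_world_choice(usable: set[str], needed: str):
    """Propose any usable object that offers the needed affordance."""
    candidates = sorted(o for o in usable if needed in AFFORDANCES.get(o, set()))
    return candidates[0] if candidates else None


domain = ["cup", "glass"]      # objects declared in the domain file
usable = {"bowl", "plate"}     # the cup is occupied and no glass is around
print(closed_world_choice(domain, usable, "contain_liquid"))  # None
print(open_world_choice(usable, "contain_liquid"))            # bowl
```

The planner fails not because the task is hard, but because “bowl” simply does not exist in its vocabulary.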

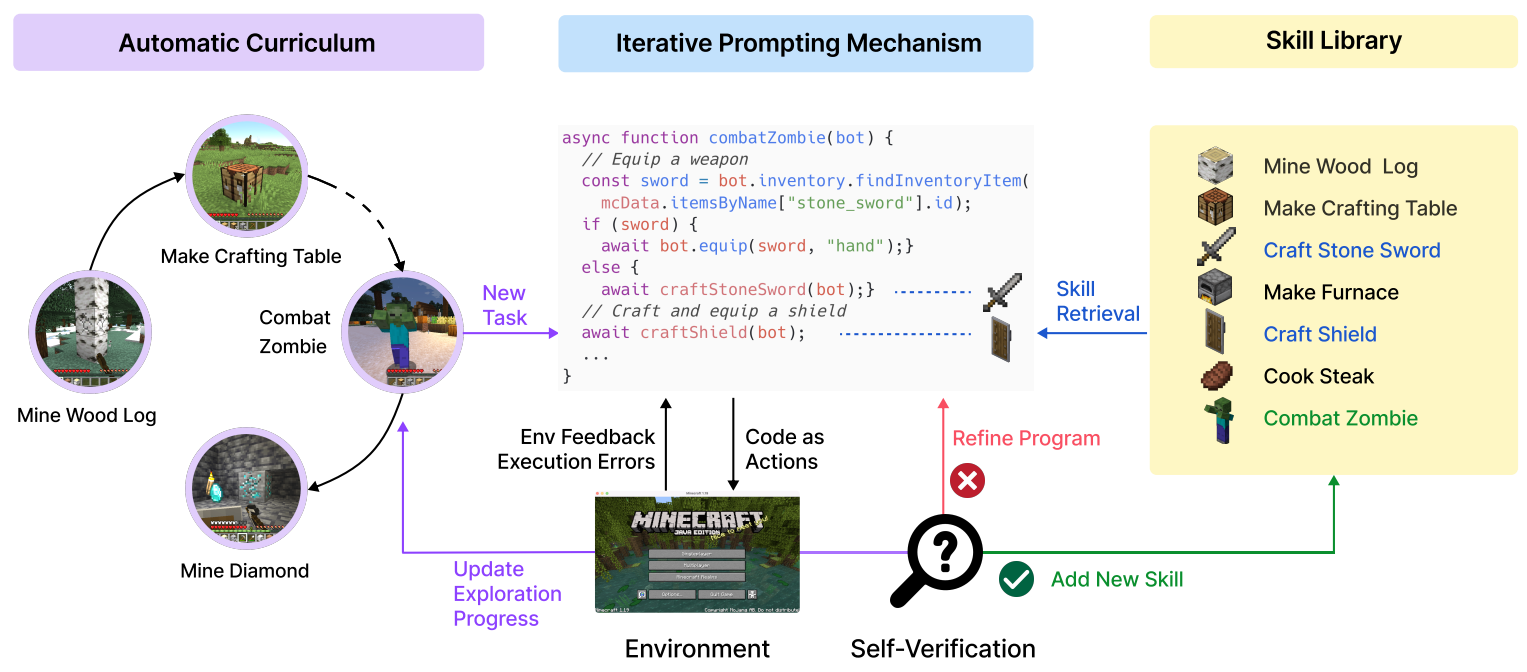

Take Voyager [2], for example: a lifelong learning agent in Minecraft that designs its own curriculum, builds a skill library, and continually expands it by interacting with the environment. Each skill is defined as an executable code block, and more complex skills are built by composing simpler ones. For example, to combatZombie, the agent needs to craftStoneSword and craftShield, which in turn require skills like mineWood and makeFurnace. So how does it work? No points for guessing: the world knowledge of the LLM comes in handy. In contrast, predefining every possible skill and scenario for a classical planner would require extensive knowledge of both the domain and the environment.
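Because composite skills call simpler ones, a skill library behaves like a dependency graph. As a rough illustration only, the sketch below resolves the order in which skills would run for `combatZombie`. The dependency lists (`SKILL_DEPS`) are a hypothetical simplification of the example above, not Voyager’s actual library or code.

```python
# Hypothetical, simplified skill dependencies based on the example in the text.
# In Voyager, skills are executable programs written by the LLM; this is only
# a dependency-graph view of how composite skills reuse simpler ones.
SKILL_DEPS: dict[str, list[str]] = {
    "mineWood": [],
    "makeFurnace": [],
    "craftStoneSword": ["mineWood"],
    "craftShield": ["mineWood", "makeFurnace"],
    "combatZombie": ["craftStoneSword", "craftShield"],
}


def execution_order(skill: str, deps: dict[str, list[str]]) -> list[str]:
    """Order skills so every prerequisite runs before the skill that needs it."""
    order: list[str] = []
    done: set[str] = set()
    in_progress: set[str] = set()

    def visit(s: str) -> None:
        if s in done:
            return  # already scheduled: reuse the stored skill
        if s in in_progress:
            raise ValueError(f"cyclic skill dependency at {s!r}")
        in_progress.add(s)
        for prereq in deps[s]:
            visit(prereq)
        in_progress.remove(s)
        done.add(s)
        order.append(s)

    visit(skill)
    return order


print(execution_order("combatZombie", SKILL_DEPS))
# ['mineWood', 'craftStoneSword', 'makeFurnace', 'craftShield', 'combatZombie']
```

Note that `mineWood` is scheduled once even though two skills need it: reuse is the whole point of a skill library.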

2. Handling Abstract Tasks

Examples of abstract tasks: Organize Closet, Browse Internet, Turn off TV.

Furthermore, LLMs, the veritable know-it-alls, can instantly generate plans for just about any task you can think of, all zero-shot [3]. Classical planning, meanwhile, requires the goal conditions to be expertly formalized in a PDDL problem file.
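For contrast, this is roughly what “formalized goal conditions” means for a classical planner: the goal is a condition over ground predicates, and a state either satisfies it or not. A minimal sketch under a closed-world assumption, with hypothetical predicate names chosen for “Turn off TV”; someone has to write this down before any planning can happen, and for a task like “Organize Closet” the right predicates are far less obvious.

```python
# Goal test for a STRIPS-style classical planner (closed-world assumption:
# any fact not listed in the state is false).
State = frozenset[str]


def goal_satisfied(state: State, pos: State, neg: State = frozenset()) -> bool:
    """True if every positive goal literal holds and no negative one does."""
    return pos <= state and not (neg & state)


# Hypothetical formalization of "Turn off TV"; in PDDL roughly
#   (:goal (not (switched-on tv)))
turn_off_tv = {"pos": frozenset(), "neg": frozenset({"(switched-on tv)"})}

before = frozenset({"(switched-on tv)", "(at agent sofa)"})
after = frozenset({"(at agent tv)"})
print(goal_satisfied(before, **turn_off_tv))  # False
print(goal_satisfied(after, **turn_off_tv))   # True
```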

3. Handling Partial Observability

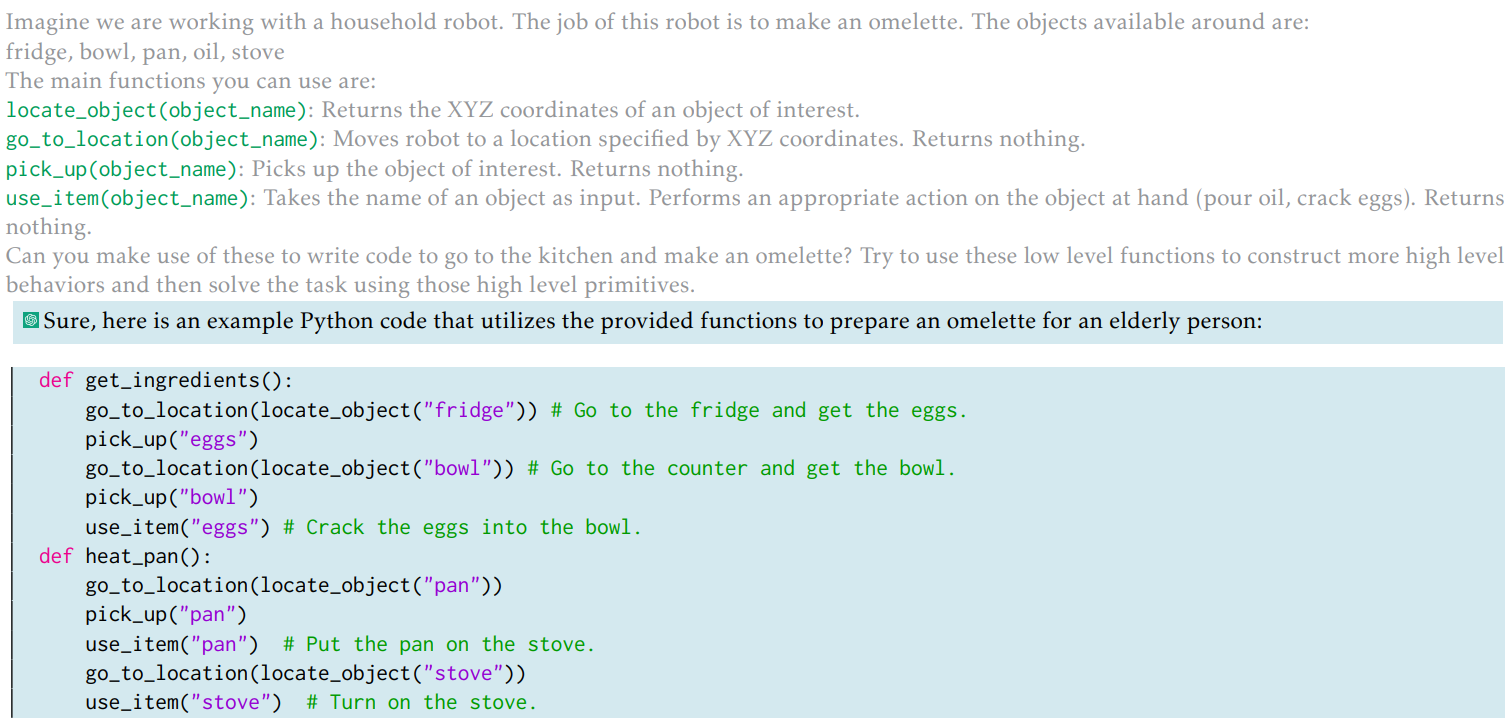

Classical planners generally assume full observability: the agent knows the exact state of the world at all times, as in a Markov Decision Process (MDP). Unfortunately, in the real world, the agent’s sensors provide only partial information, called observations. Such problems are modeled as a Partially Observable MDP (POMDP). At each time step, the history of actions and observations determines a probability distribution over the states of the environment, called a belief state. Needless to say, reasoning over beliefs often becomes intractable as the plan length grows.

LLMs can use their world knowledge to handle partial observability. Think of it as a commonsense prior that helps shape better (posterior) beliefs. For instance, given the task “make an omelette”, the LLM can output actions to go to the fridge, since eggs are likely to be found there, even though no eggs are visible (i.e., the observation is partial) [4].
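The “commonsense prior” view can be written as a single Bayesian belief update, posterior(s) ∝ P(o | s) · prior(s). A minimal sketch, assuming a made-up prior over where the eggs are (the kind of guess an LLM’s world knowledge could supply) and a made-up sensor model. We also assume the eggs do not move, so the transition step of the usual POMDP belief update drops out. All locations and numbers are illustrative.

```python
def update_belief(prior: dict[str, float], likelihood: dict[str, float]) -> dict[str, float]:
    """Bayes rule: posterior(s) is proportional to P(observation | s) * prior(s)."""
    unnormalized = {s: likelihood[s] * p for s, p in prior.items()}
    z = sum(unnormalized.values())
    return {s: v / z for s, v in unnormalized.items()}


# Hypothetical commonsense prior over where the eggs are (e.g., elicited from an LLM).
prior = {"fridge": 0.8, "counter": 0.15, "cupboard": 0.05}

# Act on the prior: search the most likely location first.
print(max(prior, key=prior.get))  # fridge

# Observation: opened the fridge, saw no eggs. Assumed sensor model: eggs in the
# fridge are missed 10% of the time; if they are elsewhere, we never see them there.
likelihood_no_eggs_seen = {"fridge": 0.1, "counter": 1.0, "cupboard": 1.0}
posterior = update_belief(prior, likelihood_no_eggs_seen)
print({s: round(p, 3) for s, p in posterior.items()})
# {'fridge': 0.286, 'counter': 0.536, 'cupboard': 0.179}
```

A good prior does not remove the need for belief updates, but it makes the first guess a sensible one.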

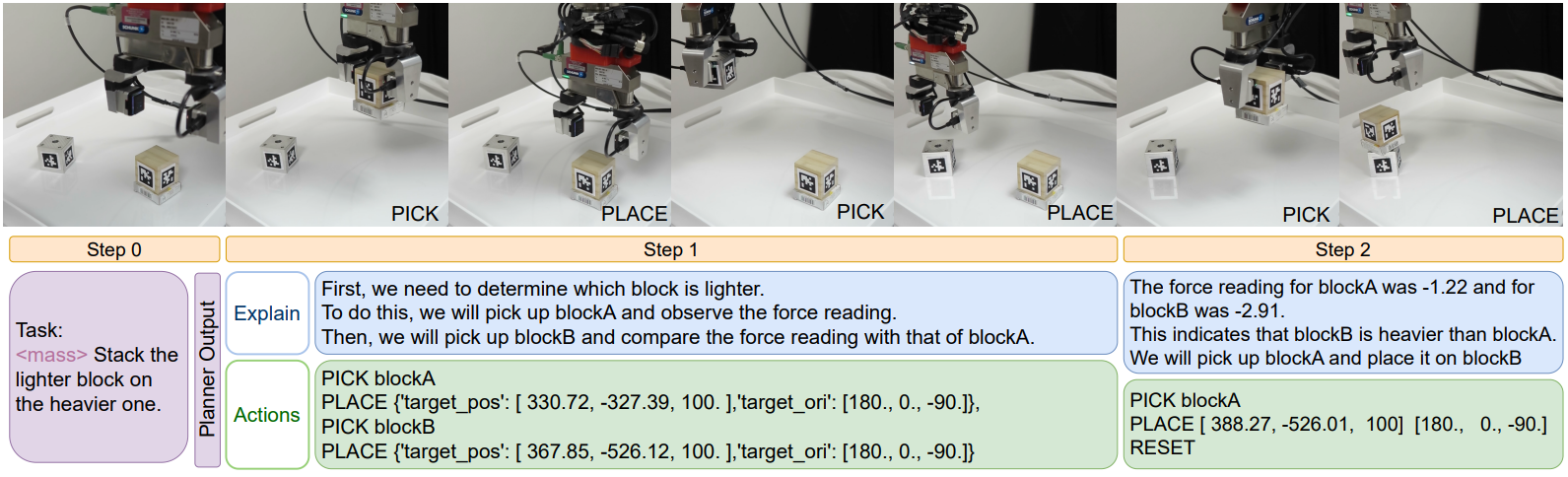

Yet another example: the agent is given the task of stacking the lighter block on the heavier one [5]. Without information about the weights, a classical planner cannot solve the task. An LLM, however, can use active perception: it first picks up each block to register its weight, then performs the task.
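A minimal sketch of that sense-then-act pattern, assuming a hypothetical simulated environment (`BlockWorld`) in which block weights stay hidden until the agent calls `pick_up`. It is not the system from [5]; it only shows the structure: gather the missing information first, then commit to the stacking action.

```python
class BlockWorld:
    """Hypothetical environment: weights are hidden until a block is picked up."""

    def __init__(self, weights: dict[str, float]):
        self._weights = weights  # ground truth, not visible to the agent
        self.stacks: list[tuple[str, str]] = []

    def blocks(self) -> list[str]:
        return sorted(self._weights)

    def pick_up(self, block: str) -> float:
        """Sensing action: lifting a block reveals its weight."""
        return self._weights[block]

    def stack(self, top: str, bottom: str) -> None:
        self.stacks.append((top, bottom))


def stack_lighter_on_heavier(env: BlockWorld) -> tuple[str, str]:
    # 1) Active perception: gather the missing information first.
    observed = {b: env.pick_up(b) for b in env.blocks()}
    # 2) Act on the now-known weights.
    light = min(observed, key=observed.get)
    heavy = max(observed, key=observed.get)
    env.stack(light, heavy)
    return light, heavy


env = BlockWorld({"red": 2.5, "blue": 0.7})
print(stack_lighter_on_heavier(env))  # ('blue', 'red')
```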

Q2. Are they really All You Need for planning?

What do we know so far?

| Capability | LLM Planning | Classical Planning |
|---|---|---|
| Open-world Planning | ✅ | ❌ |
| Handling Abstract Tasks | ✅ | ❌ |
| Handling Partial Observability | ✅ | ❌ |

Note that these aspects are not outliers; on the contrary, they are pretty much the norm in the real world. With LLMs checking all the boxes, it is tempting to declare, “An LLM is all you need for planning. Period.”

But let’s hold that thought. The world of planning doesn’t end with these three aspects. Are LLMs the be-all and end-all for planning? Maybe not just yet. Let’s explore this further and keep our tech enthusiasm in check 😉.

LLMs seem to struggle a bit [6].

In the Tower of Hanoi setup with just three disks, the LLM outputs infeasible actions.
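What makes a move “infeasible” here is precise: a move must take the top disk of a non-empty peg and may not place it on a smaller disk. The sketch below is our own illustration, not code from [6]. It checks a move sequence against these rules and generates the classical recursive solution, which needs 2^n - 1 = 7 moves for three disks. Peg names `A`, `B`, `C` are arbitrary.

```python
Move = tuple[str, str]  # (from_peg, to_peg)


def hanoi(n: int, src: str = "A", aux: str = "B", dst: str = "C") -> list[Move]:
    """Classical recursive solution; it is optimal and uses 2**n - 1 moves."""
    if n == 0:
        return []
    return hanoi(n - 1, src, dst, aux) + [(src, dst)] + hanoi(n - 1, aux, src, dst)


def validate(n: int, plan: list[Move]) -> str:
    """Simulate a plan from n disks on peg A; report the first infeasible move."""
    pegs = {"A": list(range(n, 0, -1)), "B": [], "C": []}  # last element = top disk
    for i, (src, dst) in enumerate(plan):
        if not pegs[src]:
            return f"move {i}: peg {src} is empty"
        if pegs[dst] and pegs[dst][-1] < pegs[src][-1]:
            return f"move {i}: cannot put disk {pegs[src][-1]} on disk {pegs[dst][-1]}"
        pegs[dst].append(pegs[src].pop())
    return "valid" if len(pegs["C"]) == n else "all moves legal, but goal not reached"


optimal = hanoi(3)
print(len(optimal), validate(3, optimal))     # 7 valid
print(validate(3, [("A", "C"), ("A", "C")]))  # move 1: cannot put disk 2 on disk 1
```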

In fact, it struggles a lot [6].

Given the task “browse the internet”, the LLM generates actions like “walk stereo” and “find bed”, which are irrelevant to the goal.

Even on simple Blocksworld [7].

Subbarao Kambhampati (కంభంపాటి సుబ్బారావు) (@rao2z) on Twitter, February 12, 2023:

Forget Super Bowl, it is more fun to watch the all powerful ChatGPT (v. Jan 30) trying to "plan" a 3 blocks configuration..

tldr; LLM's are multi-shot "apologetic" planners that would rather use you as their world model cum debugger.. pic.twitter.com/eYIeEVBJli
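Feasibility in Blocksworld can be checked mechanically. The sketch below is our own minimal re-implementation of the standard four-operator Blocksworld (pick-up, put-down, stack, unstack), written for illustration; it is not PlanBench’s code, and the action encoding is an assumption. It simulates a plan step by step and reports the first action whose preconditions fail.

```python
from typing import Optional

Action = tuple[str, ...]  # e.g. ("unstack", "c", "a") or ("put-down", "c")


def validate_blocksworld(init: dict[str, str], goal: dict[str, str], plan: list[Action]) -> str:
    """Simulate the 4-operator Blocksworld and report the first infeasible step.

    `init` maps every block to what it rests on: another block or "table".
    """
    on = dict(init)                # support of each block that is not in the gripper
    holding: Optional[str] = None  # block in the gripper, if any

    def clear(b: str) -> bool:
        return b in on and all(support != b for support in on.values())

    for i, (op, *args) in enumerate(plan):
        if op in ("pick-up", "unstack"):
            b = args[0]
            src = "table" if op == "pick-up" else args[1]
            if holding is not None or on.get(b) != src or not clear(b):
                return f"step {i}: {op} {' '.join(args)} is not applicable"
            del on[b]
            holding = b
        elif op in ("put-down", "stack"):
            b = args[0]
            dst = "table" if op == "put-down" else args[1]
            if holding != b or (dst != "table" and not clear(dst)):
                return f"step {i}: {op} {' '.join(args)} is not applicable"
            on[b] = dst
            holding = None
        else:
            return f"step {i}: unknown action {op!r}"
    reached = holding is None and all(on.get(b) == s for b, s in goal.items())
    return "valid" if reached else "all steps applicable, but goal not reached"


# Sussman anomaly: C is on A; A and B are on the table. Goal: A on B, B on C.
init = {"a": "table", "b": "table", "c": "a"}
goal = {"a": "b", "b": "c"}
plan = [("unstack", "c", "a"), ("put-down", "c"), ("pick-up", "b"),
        ("stack", "b", "c"), ("pick-up", "a"), ("stack", "a", "b")]
print(validate_blocksworld(init, goal, plan))                # valid
print(validate_blocksworld(init, goal, [("pick-up", "a")]))  # step 0: pick-up a is not applicable
```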

And neither finetuning [8] nor clever prompting [9] helps.

- On simple Blocksworld, finetuned GPT-3 could solve only 122/600 instances (20%) [8].

- GPT-4 could solve only 210/600 (35%) zero-shot and 214/600 (35.6%) with Chain-of-Thought (CoT) prompting [8].

- LLMs also perform poorly at verifying candidate solutions (e.g., graph colorings [9]), showing that they cannot reliably self-critique and improve.
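The verification task referenced in [9] is itself mechanically simple: a k-coloring is valid exactly when it uses at most k colors and no edge joins two vertices of the same color. A minimal sketch of such a sound checker, on a made-up graph with made-up colorings:

```python
def coloring_conflicts(edges: list[tuple[int, int]], coloring: dict[int, str]) -> list[tuple[int, int]]:
    """Return every edge whose endpoints share a color (assumes all vertices are colored)."""
    return [(u, v) for u, v in edges if coloring[u] == coloring[v]]


def is_valid_coloring(edges: list[tuple[int, int]], coloring: dict[int, str], k: int) -> bool:
    """Sound check: at most k colors are used and no edge is monochromatic."""
    return len(set(coloring.values())) <= k and not coloring_conflicts(edges, coloring)


# Hypothetical example: a 4-cycle 0-1-2-3 plus the chord (0, 2).
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
good = {0: "red", 1: "green", 2: "blue", 3: "green"}
bad = {0: "red", 1: "green", 2: "red", 3: "blue"}
print(coloring_conflicts(edges, good), is_valid_coloring(edges, good, k=3))  # [] True
print(coloring_conflicts(edges, bad), is_valid_coloring(edges, bad, k=3))    # [(0, 2)] False
```

A check like this never accepts an invalid coloring, which is exactly the property the LLM’s self-critique lacks.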

Compare this with human performance:

- A group of 50 human planners were tested.

- 39 (78%) came up with valid plans.

- 35 (70%) came up with optimal plans.

So what do we have now?

| Capability | LLM Planning | Classical Planning |
|---|---|---|
| Open-world Planning | ✅ | ❌ |
| Handling Abstract Tasks | ✅ | ❌ |
| Handling Partial Observability | ✅ | ❌ |
| Feasibility | ❌ | ✅ |
| Optimality | ❌ | ✅ |

LLMs are useful for planning. However, they come with no formal guarantees that their plans are feasible, let alone optimal.

Is it possible to enhance LLMs to have some formal guarantees?

Find out in the next installment of our series: Fusing LLM and Classical Planning

References

[1] Ding, Y. (2023). Integrating action knowledge and LLMs for Task Planning and situation handling in open worlds. Autonomous Robots, 47, 981-997.

[2] Wang, G. (2024). Voyager: An Open-Ended Embodied Agent with Large Language Models. Transactions on Machine Learning Research (ISSN 2835-8856).

[3] Huang, W. (2022). Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents. Proceedings of the 39th International Conference on Machine Learning, PMLR 162:9118-9147.

[4] Vemprala, S. (2023). ChatGPT for Robotics: Design Principles and Model Abilities.

[5] Sun, L. (2023). Interactive Planning Using Large Language Models for Partially Observable Robotics Tasks.

[6] Hazra, R. (2023). SayCanPay: Heuristic Planning with Large Language Models Using Learnable Domain Knowledge. Proceedings of the AAAI Conference on Artificial Intelligence, 20123–20133.

[7] Valmeekam, K. (2023). PlanBench: An Extensible Benchmark for Evaluating Large Language Models on Planning and Reasoning about Change. NeurIPS 2023 Track on Datasets and Benchmarks.

[8] Kambhampati, S. (2023). On the Role of Large Language Models in Planning. ICAPS 2023 Tutorial.

[9] Stechly, K. (2023). GPT-4 Doesn’t Know It’s Wrong: An Analysis of Iterative Prompting for Reasoning Problems. NeurIPS 2023 Foundation Models for Decision Making Workshop.

If you found this useful, please cite this as:

Hazra, Rishi (May 2024). Why are LLMs required for Planning?. https://rishihazra.github.io.

or as a BibTeX entry:

@article{hazra2024why-are-llms-required-for-planning,
  title = {Why are LLMs required for Planning?},
  author = {Hazra, Rishi},
  year = {2024},
  month = {May},
  url = {https://rishihazra.github.io/llm-planning/2024/05/26/llms_as_planners.html}
}